Do we see slowed progress in large fields of science?

More published papers may, ironically, retard progress

Larger field = more progress?

Here’s a question to ponder: would you guess that larger scientific fields see a faster rate of progress, compared to smaller scientific fields?

Presumably, in larger scientific fields, there are more researchers, so more papers get published in that field each year. Surely more papers lead to a higher probability that at least one of them contains a breakthrough?

A study tries to answer this question

This isn’t an easy question to answer, but a study attempted to do so: Slowed canonical progress in large fields of science

One of the things it predicted was that when the number of papers published in a field is very large, the rapid flow of new papers forces attention to already well-cited papers, and limits attention to less-established papers—even those with novel and potentially transformative ideas.

In order to test this, the study examined 1.8 billion citations among 90 million papers across 241 subjects in the Web of Science, published between 1960 and 2014. Here’s one of the things it found:

The Y axis shows something called a “Gini coefficient.”[1] In this study, it’s used to measure the “inequality” in shares of citations; a higher Gini coefficient means that a smaller proportion of papers gets the bulk of citations for a certain subject over a certain period of time, aka a “subject-year.”

Each dot in the graph represents a subject-year in the Web of Science, and the X axis is the logged (base 10) number of papers published in the subject-year (N). So as you go from left to right on the X axis, the number of papers published increases dramatically.

All this is to say: in years when a subject had more published papers (that is, when the field was “large”), the Gini coefficient was higher. Citations were shared more unequally, with the most-cited papers garnering a disproportionate share of new citations.
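
To make the Gini coefficient a bit more concrete, here’s a minimal Python sketch of how it could be computed from a list of per-paper citation counts. This is my own illustration using a standard textbook formula, not the study’s code, and the toy numbers are made up.

```python
def gini(citations):
    """Gini coefficient of a list of non-negative citation counts.

    0.0 means every paper gets the same number of citations;
    values near 1.0 mean a few papers get almost all of them.
    """
    values = sorted(citations)
    n = len(values)
    total = sum(values)
    if n == 0 or total == 0:
        return 0.0  # nothing to share, so treat as perfectly equal
    # Standard formula on sorted data, with ranks i = 1..n:
    #   G = 2 * sum(i * x_i) / (n * sum(x)) - (n + 1) / n
    weighted = sum(rank * x for rank, x in enumerate(values, start=1))
    return 2 * weighted / (n * total) - (n + 1) / n


# Toy subject-years (hypothetical data, four papers each)
even = [10, 10, 10, 10]   # citations spread evenly
skewed = [0, 0, 0, 40]    # one paper collects everything

print(gini(even))    # 0.0
print(gini(skewed))  # 0.75
```

With only four papers, the most unequal case tops out at 0.75 rather than 1.0; with thousands of papers in a subject-year, the ceiling gets very close to 1.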

For example, when the field of Electrical and Electronic Engineering published ∼10,000 papers a year, the top 0.1% most-cited papers collected 1.5% of total citations. When the field grew to 100,000 published papers per year, the top 0.1% received 5.7% of citations. The bottom 50% least-cited papers, in contrast, dropped from 43.7% of citations to ~20%, as the number of papers published grew from 10,000 to 100,000 per year.
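
The “top 0.1%” and “bottom 50%” shares quoted above are simple to compute once you have citation counts for every paper in a subject-year. Here’s a rough sketch; the function name `citation_share` and the toy data are hypothetical, and the study’s exact handling of ties and rounding may differ.

```python
def citation_share(citations, fraction, top=True):
    """Share of all citations received by the top (or bottom) `fraction` of papers."""
    # Most-cited first when top=True, least-cited first otherwise
    ranked = sorted(citations, reverse=top)
    total = sum(ranked)
    if total == 0:
        return 0.0
    # Number of papers in the slice, rounded to a whole paper, at least one
    k = max(1, round(fraction * len(ranked)))
    return sum(ranked[:k]) / total


# Toy subject-year (made-up numbers): one hit paper and 999 papers cited once
papers = [50] + [1] * 999

print(f"{citation_share(papers, 0.001):.1%}")           # 4.8%
print(f"{citation_share(papers, 0.5, top=False):.1%}")  # 47.7%
```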

The authors of this study worry that rather than causing faster turnover of paradigms, a deluge of new publications “entrenches” top-cited papers, preventing new work from rising:

we find a deluge of papers does not lead to turnover of central ideas in a field, but rather to ossification of canon.

Why would this happen?

The authors offer up some possible explanations for what might be going on:

First, when many papers are published within a short period of time, scholars are forced to resort to heuristics to make continued sense of the field. Rather than encountering and considering intriguing new ideas each on their own merits, cognitively overloaded reviewers and readers process new work only in relationship to existing exemplars (16–18).

So maybe, in larger fields, researchers are overwhelmed by the amount of information, and resort to more mental shortcuts to process new work.

Another possibility is that with so many papers in a field, researchers just may not come across certain work, especially work that’s not published in the “best” journals.

Moreover, an idea that’s too novel, and doesn’t fit within current schemas, might be less likely to be published, read, or cited. It might be too “weird” to be taken seriously:

Faced with this dynamic, authors are pushed to frame their work firmly in relationship to well-known papers, which serve as “intellectual badges” (19) identifying how the new work is to be understood, and discouraged from working on too-novel ideas that cannot be easily related to existing canon.

Here’s another possibility: perhaps larger fields also tend to have larger teams on average, and perhaps larger research teams don’t disrupt science as much as smaller teams?

Ossification of canon

The study also found that the most-cited papers maintained their number of citations year over year when fields were large, while all other papers’ citation counts decayed.

Specifically, for each paper, the authors looked at how much less it was cited than in the previous year, or how its citation rate “decayed.” In years when fewer papers were published, aka the field was small, the top most-cited papers saw a similar “citation decay rate” as less-cited papers.

In larger fields, however, the most-cited papers showed a much lower citation decay rate compared to less-cited papers. In very large field-years, with about 100,000 papers published, the most-cited papers on average saw no decline in their numbers of citations received year over year.
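
As a rough illustration of what a “citation decay rate” could look like in practice, here’s a small sketch. I’m assuming the simplest possible definition, the fractional drop in citations from one year to the next; the study’s actual measure may well be defined differently, and the numbers are invented.

```python
def decay_rate(prev_year, this_year):
    """Fractional drop in citations from one year to the next.

    Assumed definition for illustration only:
    0.0 means no decline, 0.25 means 25% fewer citations than last year.
    """
    if prev_year == 0:
        return None  # undefined when there were no citations to lose
    return 1 - this_year / prev_year


# Hypothetical papers in a very large field-year
canon_paper = decay_rate(200, 200)  # cited just as often as last year
other_paper = decay_rate(20, 15)    # cited less often than last year

print(canon_paper)  # 0.0
print(other_paper)  # 0.25
```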

Additionally, the probability of a paper ever reaching the top 0.1% most cited in its field shrank when it was published in the same year as many others. This held true across fields in the same year, and across years in individual fields (see A below).

The amount of time it took for a paper to “break through” and become well cited also differed for large fields. In the figure above, B presents the median time in years for an article to break into the field’s canon, conditional on the paper ever becoming one of the top cited in its field.

When a field is small, papers rise slowly over time into the top 0.1% most cited, and a successful paper is predicted to take ~9 years to reach the top 0.1% most cited.[2] Papers entering the canon in the largest fields, by contrast, shoot quickly to the top; they’re predicted to take a median of less than a year to reach the top 0.1%.

Maybe in smaller fields, more scholars discover new work by actually reading across the literature, including both highly cited and less cited work, whereas in larger fields, scholars mainly look at the top most cited papers?

All this suggests that as fields grow larger, there’s a kind of ossification or crystallization of the top-cited papers, and less churn in the order of the most-cited papers. Is this a good thing?

Incentives

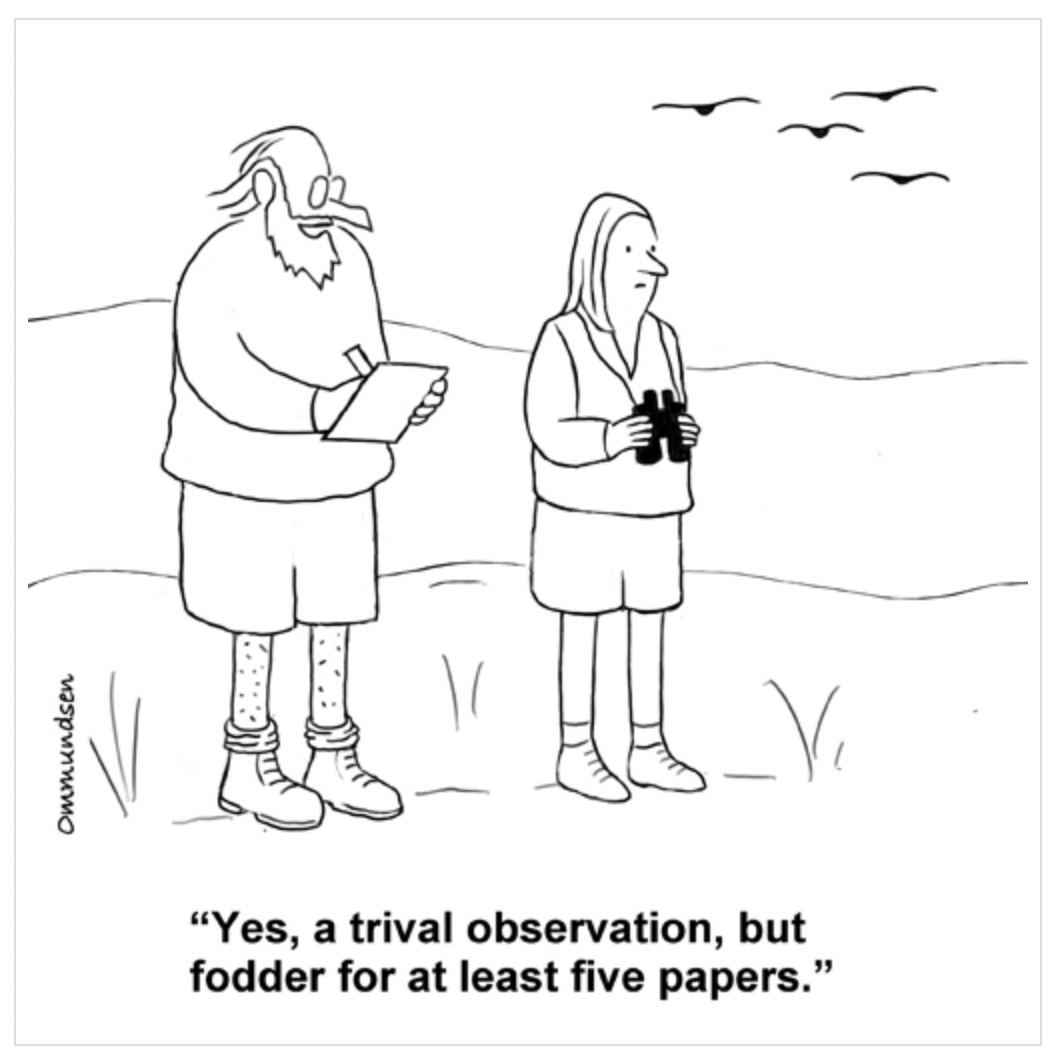

What’s sad is that the academic environment encourages a “more publications are better” view. Academic researchers are rewarded based on the number of publications they produce; they need to “publish or perish” in order to get tenure or promotions.

“Quality” is also predominantly judged quantitatively. Citation counts are used to measure the importance of individuals (7), teams (8), and journals (9) within a field.

Critiques of the study

This study is interesting, but it’s not immune to critique. For example, there could be problems with the data or the analyses.

Plus, there’s more than one way to interpret their results. For one, the study was at the level of fields and large subfields; one could argue that progress now occurs at lower subdisciplinary levels, and to examine that would require more precise methods for classifying papers.

The researchers do address this with the following:

But note that the fields and subfields identified in the Web of Science correspond closely to real-world self-classifications of journals and departments. Established scholars transmit their cognitive view of the world to their students via field-centric reading lists, syllabi, and course sequences, and field boundaries are enforced through career-shaping patterns of promotion and reward.

Also, it may be that progress still occurs, even though the most-cited articles remain constant:

While the most-cited article in molecular biology (22) was published in 1976 and has been the most-cited article every year since 1982, one would be hard pressed to say that the field has been stagnant, for example.

We certainly can’t say there’s been no progress. On the other hand:

But recent evidence (23) suggests that much more research effort and money are now required to produce similar scientific gains—productivity is declining precipitously.

I don’t know what the correct stance on this is, but at the very least we should consider the possibility that we’re missing new breakthroughs because we’re locked into overworked areas of study.

What’s the solution?

Let’s say that what this study showed, and the authors’ interpretations of it, were true. What’s the solution?

The authors offer up some possibilities:

A clearer hierarchy of journals with the most-prestigious, highly attended outlets devoting pages to less canonically rooted work could foster disruptive scholarship and focus attention on novel ideas. Reward and promotion systems, especially at the most prestigious institutions, that eschew quantity measures and value fewer, deeper, more novel contributions could reduce the deluge of papers competing for a field’s attention while inspiring less canon-centric, more innovative work.

I think it’s fine for journals to try different things, though I’m a bit skeptical of this “hierarchy of journals” approach. And though it would be great to “eschew quantity measures and value fewer, deeper, more novel contributions,” who gets to decide what’s deep and novel?

They also suggest:

A widely adopted measure of novelty vis a vis the canon could provide a helpful guide for evaluations of papers, grant applications, and scholars.

Even if we could agree on a “widely adopted measure of novelty,” how likely is that measure to correlate with actual novelty? Does the “majority” in a field, or even the most prestigious science journals, have a good track record of determining truly novel or significant work?[3]

The authors also suggest:

Revamped graduate training could push future researchers to better appreciate the uncomfortable novelty of ideas less rooted in established canon.

Eh, yeah sure, we can certainly improve graduate training… But not if it means some kind of centralized revamp of graduate training.

I think we can start with getting rid of censorship, which we saw happen on a massive scale during the COVID era. It might also help to remove the stigma of certain types of research, or encourage the notion that no idea is off limits.

Maybe the problem is less the number of researchers we have than the types of researchers, and incentives, we have. Are there market solutions that we’re not seeing?

Some have also argued that the way most academic scientists get funding (by writing grant proposals to government agencies like the NSF or NIH, for example) invites them to justify their research by either exaggerating or picking predictable projects. Perhaps it would therefore be better to fund people, instead of projects.

I don’t know what the solution is, but people, institutions, etc. should experiment and try different things.

Have you got any ideas? Leave them in the comments below.

[1] You may have heard of this in the context of income inequality in countries.

[2] When published in the same year as 1,000 other papers in the field.

[3] Reminder: Hans Krebs submitted his work on the Krebs cycle to the journal Nature, but they rejected it because they had too much of a backlog on submissions. Krebs would later win the Nobel Prize for that work. Then there’s Kary Mullis who submitted his work on PCR to Nature, as well as Science. They both rejected it. Mullis would eventually win the Nobel Prize for that work. There are other examples like this.

Your ideas mesh with those of Eugyppius in these articles: What is wrong with The Science?

Reflections on the unnoticed revolution in our academic institutions that took root in the middle of the 20th century and is steadily wringing all that is original, interesting and good out of them.

https://www.eugyppius.com/p/what-is-wrong-with-the-science and https://www.eugyppius.com/p/more-on-what-is-wrong-with-the-science

This is pretty interesting. It seems as if science is not immune to the same kinds of social hierarchies seen in other fields, in that a few tend to do extremely well while the rest scatter about with much less. It's similar to YouTube or any social media platform, including Substack, where most authors will struggle to get readers and subscribers while larger ones will continue to grow. Essentially, many things appear to follow a Pareto distribution, much to the chagrin of the smaller fish.

I think what makes science rather interesting is the dynamic between the public, researchers, and industry. Consider that most of the research that gets funding tends to be research that the public wants such as cancer, HIV, and Alzheimer's research. The field of Alzheimer's seems like it needs to look back into the literature given that one of the most widely cited articles actually appeared to have been subject to blot manipulation. And yet, at the same time two immunotherapies have been rushed for approval with limited information because the public demands a treatment for Alzheimer's.

This also comes with the fact that the media will take a study and misrepresent or misinterpret its findings, which can then sway public perception even further.